If you work in IT and are responsible for backing up or transferring large amounts of data, you’ve probably heard the term data Deduplication. Data are an important asset to every business. Day by day the growth of data is exponentially increasing and managing the tremendous amount of data by storing them in physical storage requires a highly scalable storage solution. There are few techniques like Compression, Deduplication that plays a vital role in using the storage efficiently. In the last few years, Data Deduplication is becoming one of the mainstream technology for effectively storing the data.

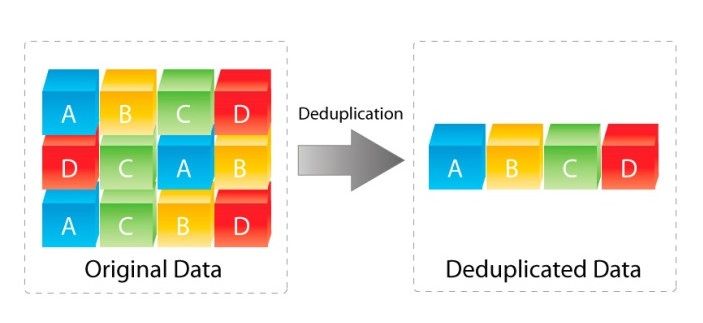

At its simplest definition, This process of eliminating redundancy is known as data Deduplication.

Deduplication vs. Compression

Deduplication is sometimes confused with compression, another technique for reducing storage requirements. While deduplication eliminates redundant data, compression uses algorithms to save data more concisely. Some compression is lossless, meaning that no data is lost in the process, but "lossy" compression, which is frequently used with audio and video files, actually deletes some of the less-important data included in a file in order to save space. By contrast, deduplication only eliminates extra copies of data; none of the original data is lost. Also, compression doesn't get rid of duplicated data -- the storage system could still contain multiple copies of compressed files.

Deduplication often has a larger impact on backup file size than compression. In a typical enterprise backup situation, compression may reduce backup size by a ratio of 2:1 or 3:1, while deduplication can reduce backup size by up to 25:1, depending on how much duplicate data is in the systems. Often enterprises utilize deduplication and compression together in order to maximize their savings

Types of Deduplication:

Commonly used Deduplication methods are: File level & Block level

File Level Data Deduplication:

File Level Data Deduplication eliminates redundant files. This approach is very simple and fast, but the ratio of Deduplication is very small. There might be redundant data in files, which file level Deduplication cannot find.

Block Level Data Deduplication :

In block level Deduplication, files are chunked into blocks of fixed size or variable size. This process is called chunking. These blocks are then Deduplicated. The Deduplication percentage is very high in Block-Level Deduplication when compared to file level Deduplication since there is more number of blocks which contain redundant data leading to more Deduplication. There are some block-level Deduplication approaches, one of them is Fixed-size chunking approach. It uses Fixed length block size to find duplicates. The other approach is Variable-size chunking approach. This approach divides the file into variable length data chunks to find duplicates. It is one of the most widely used approaches to Deduplicate data since it has higher Deduplication percentage.

Methods of Deduplication: (Client & Server side)

Client-side Deduplication:

Divides data stream into chunks of data to eliminates duplicate data at the client side before being transferred to the server. It decreases the amount of data transmitted to the server.

Server-side Deduplication:

Eliminates duplicate data from a data stream before it is stored in a server which improves the Storage Utilization by storing only the original data.

Drawbacks and concerns:

One method for deduplicating data relies on the use of cryptographic hash functions to identify duplicate segments of data. If two different pieces of information generate the same hash value, this is known as a collision. The probability of a collision depends upon the hash function used, and although the probabilities are small, they are always non zero. Thus, the concern arises that data corruption can occur if a hash collision occurs, and additional means of verification are not used to verify whether there is a difference in data, or not. Both in-line and post-process architectures may offer bit-for-bit validation of original data for guaranteed data integrity.The hash functions used include standards such as SHA-1, SHA-256 and others.

The computational resource intensity of the process can be a drawback of data deduplication. To improve performance, some systems utilize both weak and strong hashes. Weak hashes are much faster to calculate but there is a greater risk of a hash collision. Systems that utilize weak hashes will subsequently calculate a strong hash and will use it as the determining factor to whether it is actually the same data or not. Note that the system overhead associated with calculating and looking up hash values is primarily a function of the deduplication workflow. The reconstitution of files does not require this processing and any incremental performance penalty associated with re-assembly of data chunks is unlikely to impact application performance.

Another concern is the interaction of compression and encryption. The goal of encryption is to eliminate any discernible patterns in the data. Thus encrypted data cannot be deduplicated, even though the underlying data may be redundant.

Although not a shortcoming of data deduplication, there have been data breaches when insufficient security and access validation procedures are used with large repositories of deduplicated data. In some systems, as typical with cloud storage,[citation needed] an attacker can retrieve data owned by others by knowing or guessing the hash value of the desired data.